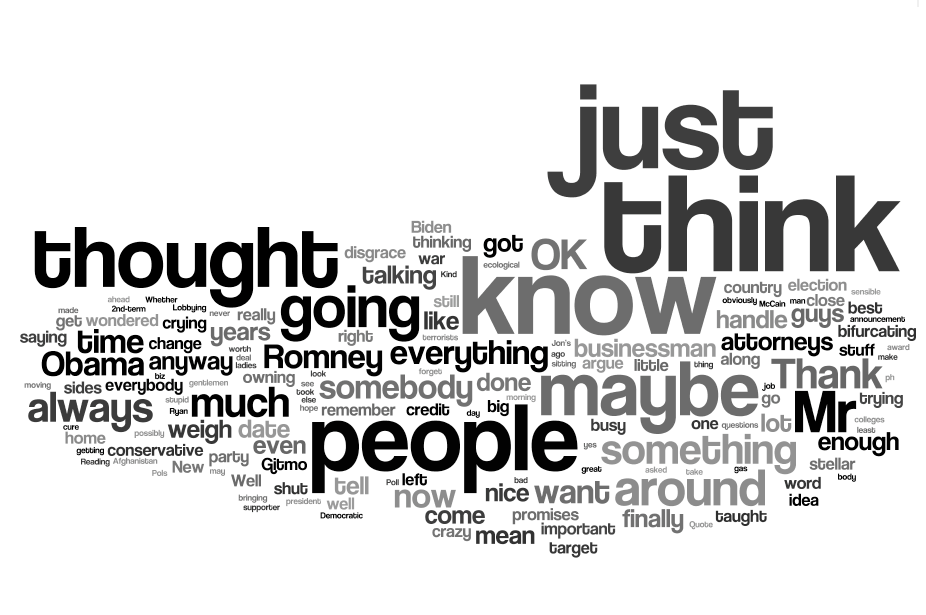

- Think. Thought. Just. Know. Maybe. People.

These were the most heavily used words in Clint Eastwood’s speech to the Republican National Convention on Aug. 30 – the same night that Republican presidential nominee Mitt Romney would later speak.

Eastwood’s speech is now known for his lecturing of an invisible President Obama, represented by an empty chair, which spawned an entire meme culture built around “invisible Obama” and the metaphor of emptiness. It is also known for being hardly coherent but wholly hilarious.

The distant reading supports the impression of Eastwood’s spontaneity, ambiguity and possible confusion. The most used words pull against each other – “think” signals opinion while “know” signals certainty, for example. He also used the word “maybe” several times, another hint of a lack of clarity.

None of these words are at all complex. In fact, many of them would probably be in an eight-year-old’s vocabulary – save “bifurcating,” which may have come out of left field. This suggests either unpreparedness, a lack of comfort when speaking in front of large crowds (though since Eastwood is in the movie business, that seems unlikely) or a simple state of confusion.
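The counting behind a word cloud like this one can be sketched in a few lines. This is only a rough illustration, not how Wordle itself works: the stopword list is a tiny hypothetical one, the `top_words` helper is my own name, and the sample sentence is made up rather than quoted from the speech.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; real tools ship much longer ones.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "i", "you",
             "it", "is", "that", "in", "we"}

def top_words(text, n=6):
    """Return the n most frequent non-stopword words with their counts."""
    # Lowercase the text and pull out runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)

# Made-up sample text, not a quotation from any speech.
sample = "I think maybe we know. Maybe people just think, and maybe that is it."
print(top_words(sample, 3))
```

A word cloud then simply scales each word’s font size by its count, which is why it can show that “maybe” came up often but not how it was said.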

However, this distant reading doesn’t even hint at the hilarity of the speech. It is simply funnier to read the transcript, with its broken phrases, dashes and self-interruptions, or to watch the video where he actually lectures the empty chair. The distant reading also doesn’t provide the context: the RNC, on a night after hurricanes throughout the south and just minutes before the highly anticipated speech from the nominee was due to begin. The distant reading misses how muddled the speech was against the backdrop of that high-tension situation.

I wish there were a new distant reading tool for situations where the text is also on video (such as a speech, a reading or a monologue from a movie) that could piece together screenshots of the speaker or the scene and match them to each word used. For example, scrolling over the word “maybe” in the visualization could bring up a series of screenshots from the video, one for each time Eastwood said “maybe,” showing his facial expression as he said it. Or, better yet, video software could recognize individual words in a speech and splice them together, so that for the word “maybe” you would also have a video of Eastwood saying “maybe” each time in his speech (just one-word clips pieced together in chronological order).
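The core of such a tool would be an index from each word to the moments it is spoken. Here is a minimal sketch, assuming a word-level timed transcript is already available as `(start_seconds, word)` pairs, for instance from captions or speech recognition. The `build_word_index` name and the timings below are invented for illustration.

```python
from collections import defaultdict

def build_word_index(timed_words):
    """Map each lowercased word to the start times (in seconds) where it is spoken."""
    index = defaultdict(list)
    for start, word in timed_words:
        # Normalize case and trim surrounding punctuation so "Maybe" and "maybe." match.
        key = word.lower().strip(".,!?\"'")
        index[key].append(start)
    return dict(index)

# Hypothetical word-level timings, invented for illustration only.
timed_words = [
    (0.0, "Maybe"), (0.6, "I"), (0.8, "think"), (1.4, "so."),
    (2.1, "Maybe"), (2.7, "not."),
]

index = build_word_index(timed_words)
print(index["maybe"])  # start times a viewer could jump to or grab screenshots from
```

Hovering over a word in the cloud would then look up its list of timestamps and pull the matching frames or one-word clips from the video.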

For pieces of text that are not in video format, it would still be nice to have the contextual element as part of the visualization. For long pieces of text, perhaps each word could be linked to a list of the passages where it appears. That would give a better picture of why the author chose that word at that moment and give the reader insight into context, which is – as seen in the visualization of the Eastwood speech – a very important component of storytelling.
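For plain text, this idea is close to what is usually called a keyword-in-context listing: every occurrence of a word shown with a few words on either side. A rough sketch follows, where the function name, the window size and the sample text are all my own assumptions.

```python
import re

def keyword_in_context(text, keyword, window=3):
    """Return (left context, word, right context) for each occurrence of keyword."""
    tokens = re.findall(r"[\w']+", text)
    hits = []
    for i, tok in enumerate(tokens):
        if tok.lower() == keyword.lower():
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, tok, right))
    return hits

# Made-up sample text, not taken from any speech.
sample = "Maybe it will rain today. We could stay in, or maybe go out anyway."
for left, word, right in keyword_in_context(sample, "maybe", window=2):
    print(f"{left:>12} [{word}] {right}")
```

Linking each word in the cloud to a listing like this would let the reader move from the count back to the sentences behind it.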

I also visualized Paul Ryan’s RNC speech, out of curiosity. Ryan gave this highly anticipated speech to the convention crowd on Aug. 29, 2012, the night before Romney was slated to speak. This was a convention that “competed” for national attention with hurricanes and storms in the southern part of the country, and was shown on some news channels in a split-screen format – a move by the media that was criticized by many.

The visualization suggests that Paul Ryan’s speech wasn’t out of the ordinary compared with those of his political counterparts. He used words such as “president,” “Romney” and “Obama,” but also “life” and “country,” signifying that his speech was personal as well.

My extra credit relates to the second Wordle I did about Paul Ryan’s RNC speech and looks into the Paul Ryan Gosling meme. http://www.youtube.com/watch?v=fJfMEiVGl6M

This is fantastic! I love your idea for a word cloud that drills down to specific moments in a video–hope someone makes that!

Thanks! I hope so too! It would be a beautiful way to look into videos – especially in times of political tension, such as the debates – and gather the inherent meaning from them. I wonder if tools like this, and distant readings extended to social media and multimedia such as Twitter, could eventually impact the outcome of debates, and maybe even the results of elections.