My (detailed) experience as a novice encoder. I had no prior experience with programming before this project.

Getting Started

I wouldn’t have been able to start without the Digital Humanities boot camp or the first group meeting, where I was able to set up Oxygen, create my account on github.com, and download and install the GitHub client for Mac. In spite of that, when I was working on my own, I experienced technology anxiety, which I describe as unease caused by the various new programs required and fear that all of those elements would not become coherent. There was a slight error when my GitHub client was installed: the sg-data files downloaded to my documents folder, which caused a moment of panic when I couldn’t find them. I soon realized that this sense of unease was simply a part of the project. As I worked, the project did become coherent, but there were issues along the way. The majority of the work was learning the encoding language, which was precisely like learning a new language. When I was unsure of how to encode an element, the SGA Encoding Guidelines didn’t always answer my questions because I am a novice: the answers were certainly there, but the explanations did not always make sense to me. Our team’s extensive Google document answered many of my questions, and I was thankful that this was a collaborative project.

Markup Process

When doing the markup, I would start by looking at the manuscript image to get a feel for the elements of the page. Some pages were fairly “clean,” meaning there was not a lot to include in the markup besides transcribing the words on the page. The bulk of the encoding, for our purposes, focused on cross-outs. I also liked to start by looking at the manuscript to keep the spirit of the project, the manuscript itself, as the focal point. I did use the Frankenstein Word files (transcriptions of the text of the manuscript), and these files were invaluable. Deciphering Mary Shelley’s handwriting would have at least doubled, if not tripled, the amount of time the project took, and I’m not sure that I would always have been able to make logical sense of the words in the manuscript images. That being said, I would still check the transcription page against the manuscript image. I think the most challenging aspect of the markup was learning the necessary tags, especially as the tags continued to evolve. The tags became easier as the encoding progressed because they became more familiar. While encoding, there were also questions of specificity: encoding is meant to capture what is on the manuscript page, but how detailed can and should the markup be? When a cross-out appeared, it was encoded with the attribute rend="overstrike", but that is not a particularly descriptive label. There were variations in the cross-outs that it did not describe, for example one that used three diagonal lines, or one with two horizontal lines. I had a few pages with a fair amount of marginal text, and I initially struggled with how to properly encode cases where the marginalia was physically aligned with lines 3, 4, and 5, for example, but might actually all belong to line 2. That turned out to be too specific for our purposes, but it still seems like an important thing to note.

I also wondered how important it is to describe the non-text elements of the page, such as inkblots, places where the handwriting was clearer or less clear (not illegible, but there were instances when it seemed neater or messier), places where the quill had fresh ink, the doodles and how best to describe them, et cetera. Many of these concerns seem subjective, the doodles especially so: my way of seeing a doodle may be very different from the way another person would view it. It does not seem accurate to leave these elements out, but it seems their level of importance depends on what the goal of the encoding is. I also noticed that I had to resist the temptation to act as an editor by fixing spelling or grammatical errors. It seemed odd to transcribe misspelled words, but I had to accept that my role was not that of an editor.
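
To make the cross-out markup described above a little more concrete, here is a minimal sketch in Python rather than anything taken from the project itself. Only the attribute rend="overstrike" comes from my encoding work; the element names `<zone>`, `<line>`, and `<del>` are assumptions based on common TEI usage, the sample wording is invented rather than transcribed from the manuscript, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment. Element names are assumed from common TEI usage;
# the wording is invented for illustration, not a manuscript transcription.
SAMPLE = """<zone>
  <line>It was on a <del rend="overstrike">dark</del> dreary night</line>
  <line>of <del rend="overstrike">Decem</del> November</line>
</zone>"""


def struck_words(xml_text):
    """Return the text of every <del> element marked rend="overstrike"."""
    root = ET.fromstring(xml_text)
    return [d.text for d in root.iter("del") if d.get("rend") == "overstrike"]


def reading_text(xml_text):
    """Return each line's text with the crossed-out words left out."""
    root = ET.fromstring(xml_text)
    lines = []
    for line in root.iter("line"):
        parts = [line.text or ""]
        for child in line:
            # Skip the deleted words, but keep the text that follows them.
            if child.tag != "del":
                parts.append("".join(child.itertext()))
            parts.append(child.tail or "")
        # Collapse the double spaces left behind by removed words.
        lines.append(" ".join("".join(parts).split()))
    return lines


print(struck_words(SAMPLE))
print(reading_text(SAMPLE))
```

The sketch also shows the limit I ran into: every cross-out carries the same label, so nothing in the markup distinguishes three diagonal strokes from two horizontal ones.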

Some frustrating aspects of the project:

For all the initial difficulty of learning the markup language, the encoding seemed stress-free when compared with the frustrating technological issues. The schema did change as the project went along, but that was more something I had to be careful to pay attention to than actually frustrating. One of the first issues was that the documents wouldn’t validate in Oxygen, which was required in order to push them to GitHub. I didn’t know the language of Oxygen well enough to know what caused the problem, which made it difficult for me to fix on my own. Fortunately, I could consult my group, and I found that sometimes a < needed to be moved to a different line. I think an encoder would need more experience with Oxygen in order to troubleshoot effectively. Other than the validation issue, Oxygen was relatively straightforward. My main frustrations were with the GitHub client for Mac: there was an issue where the client would not allow me to push only the files I had worked on and instead wanted me to push every file in the sg-data folder. I know that other people experienced this issue, and I do not know the exact cause, only that the experienced encoders at MITH thankfully fixed it. This seemed like a culmination of my technology anxiety, because it seemed as if my encoding might be altered permanently or lost altogether. My fears were not realized, and they might be easily alleviated if the issues with the GitHub client could be eliminated.
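
The stray < problem mentioned above is the kind of error that can be located outside of Oxygen. The following is a minimal sketch using Python's standard library, under the assumption that the failure is one of basic XML well-formedness; it does not check a file against the project schema, which is what Oxygen's validation does. The function name and both sample strings are hypothetical.

```python
import xml.etree.ElementTree as ET


def first_xml_error(xml_text):
    """Return None if the text is well-formed XML, else (line, column, message)."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError as err:
        # ParseError.position holds the line and column of the problem.
        line, column = err.position
        return line, column, str(err)
    return None


# Invented examples: the second has a stray "<" at the start of its second line.
GOOD = "<line>It was a dreary night</line>"
BAD = "<line>It was a dreary night\n<</line>"

print(first_xml_error(GOOD))
print(first_xml_error(BAD))
```

A check like this only reports where the parser gave up, which is often but not always where the mistake actually is, so comparing against a neighbouring, working line is still useful.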

The “emotional side”

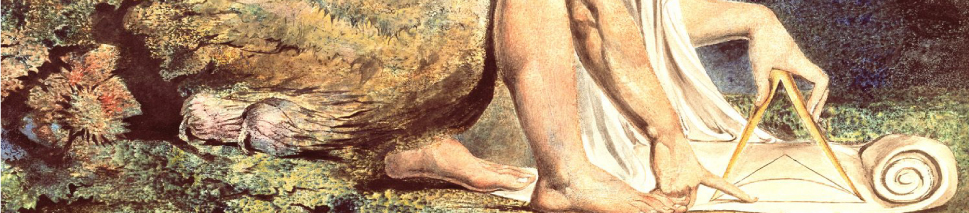

The most rewarding aspect of the project was working closely with the manuscript images. I know the images were digital copies, but that did not prevent the experience from being thrilling. In fact, when I first looked at my ten pages, I felt a shiver of disbelief and awe: I was looking at the actual manuscript of Frankenstein! I did not think it was possible for anyone but scholars of the manuscript to have access to it. In addition to the thrill, I also felt closer to the text. What I mean is, I think there is often a distance between the author and the readers, especially when the author lived in a previous century, and this can turn authors into figures of isolated and independent genius. It is sometimes hard to appreciate that their writing reflects the real struggles of their times if the authors themselves do not seem as if they had been real people. I admit to feeling this way about Mary Shelley, and seeing the spelling and grammatical errors, as well as the various cross-outs and changes in the manuscript, made her seem more human. This also allowed me to feel closer to the manuscript: I could appreciate that it had been a fluid piece of art in progress, rather than the permanent finished product it appears to be when reading the novel. Though Frankenstein is likely not in any danger of being forgotten, digital encoding could be a wonderful way to bring attention and appreciation to neglected texts.

Questions and thoughts for the future:

I wondered whether it matters how much familiarity an encoder has with the text. I found that having familiarity with the novel made the process easier because I never felt lost in the text. I think encoding a text I had never read before, especially if it were an isolated ten pages like in this project, would have been extremely difficult. I came to the conclusion that familiarity with the text is important, if not absolutely crucial. If this is accurate, then it seems ideal that students of literature also be trained in encoding.

Thoughts on how to develop the tags further

My sense is that there should be one standardized markup that comes as close as possible to the physical page of the manuscript, in that it captures all of its elements. I wonder if there is a way to make such a standardized markup: can any two (or more) encoders ever completely agree on what precisely needs to be included? I think it is possible to reach an agreement (through an ongoing process), and from there, individual researchers could do their own encoding work and create tags for monstrosity, for education, for women’s issues, for anything a researcher would look for in Frankenstein. This might create many versions of encoding the novel, but the versions could be used as a tool for analysis.

My suggestions for change

Our group was fairly large, and while the discussions via the Google Doc were invaluable, I think more face-to-face interaction and/or smaller groups, along with some in-person encoding, might prevent us from going astray in the encoding and alleviate some of the frustrations with the software. When we worked in our quality control checking groups (I worked with two other group members), it seemed like an ideal dynamic: we could easily check each other’s files, meet in person, and carry on an email correspondence. As for the technology anxiety, I’m not sure that anything can be done about that besides gaining experience and perhaps a software change (likely beyond our control) to the GitHub client for Mac.

Before this project, if I had any thoughts about how digital archiving was done, I would have said it was just a matter of storing the manuscripts digitally. While it is important to preserve manuscripts digitally as well as to allow a larger audience access to them, this would not require a specialized language. I see the special language of textual encoding as a way to engage a text, to describe and analyze it while preserving it. This adds a richness and depth to literary study and analysis while also keeping literature current. I understand now that there are powerful digital ways to study literature that I was admittedly ignorant of before this class.