I’m thinking of my “favorite aspect of technology” in terms of later class discussion and the syllabus in general, primarily the definition of technology—whether it must be utilitarian and whether it can have agency.

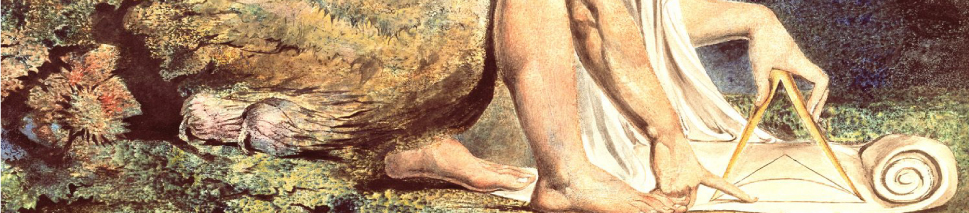

It seems to me that the “glitches” I enjoy might appeal to me because they make the machine feel fallible and therefore human. Mixing up people’s pictures, mis-guessing the next word: those are things I might do. And it’s pretty human to sympathize with a consciousness that feels “like me.” And there it is: these mistakes make me recognize technology as a consciousness. And it makes me giggle! Humor, after all, seems to be a mix of that which is delightful and that which is terrifying. It’s like the uncanny. The familiar and the unfamiliar, in the same space. The shiver, or laugh, seems to emanate from the inability to understand which one is covering the other, which is the real and which is the costume. Is the technological consciousness friendly and familiar? Or is it taking over? (Could the takeover be parental or for our own good, as suggested by Brautigan? I feel the shiver/giggle again at that idea, which seems to repeat the same uncanny trick.)

And now that Freud is brought into my ramblings, I can talk about the other effect of those charmingly frightening glitches. Regarding a human consciousness, we tend to believe that slip-ups reveal truth. Whether we learned the technique directly from Freud, from popular culture’s appropriation of his ideas, or whether the instinct is much older than either, we tend to watch for the subconscious to poke through and reveal the great truth of who we are. In this way, a computer glitch becomes the technological subconscious poking out at me.

I mentioned in class that I was delighted by the way my iPhone mixes up my friends’ pictures. It’s funny because it’s silly. It’s funny because it brings technology down to a human level. And it’s terrifying because it brings human beings down to the level of the machine. This happens because I recognize consciousness in what I want to be only a tool. (I see the errors as stronger evidence of human-like consciousness, by the way, than a computer’s usual trick of, say, computing.)

In the particular glitch I mentioned, the slip-up goes further. It tells me that the computer can’t tell people apart. It mixes us up! We are numbers or objects to the brain that we have built. So as I recognize a frightening humanity in the machine, I know that it does not recognize mine.